The scientific community is in turmoil as the "multi-year research programming law" (loi de programmation pluriannuelle de la recherche, LPPR) approaches. On the agenda, among other things: greater competition between institutions and between individuals through calls for projects; more job insecurity (already very high) through the introduction of the "tenure track", that is, a 5-7 year fixed-term contract (CDD) that may or may not lead to a permanent position; more bureaucratic evaluation (that is, by quantified targets) of institutions and individuals; differentiated pay and working conditions, notably through a generalized bonus policy; a teaching load for academics that is no longer statutory (192 hours of "équivalent-TD", tutorial-equivalent teaching) but "negotiated" with management; a strengthening of "partnership" research (that is, research for the benefit of a company) at the expense of basic research; and, overall, a considerable weakening of scientific and academic autonomy.

Naturally, this project is causing considerable concern in the academic community, and many criticisms have already been voiced, through the institutions (which ignore them) but also in the press. In passing: I will speak below of a project, a policy or an ideology rather than a law, since most of the project will likely bypass parliamentary debate. The "tenure tracks", for example, which spell the announced death of the chargé de recherche and maître de conférences statuses, were already put in place this year, before the law was even discussed, thanks to a legislative provision that was itself never debated in Parliament: the "CDI de projet" (project-based open-ended contract), passed by ordinance in 2017.

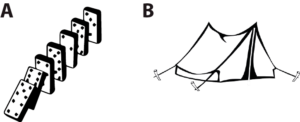

Much has been said and written, but discussions generally revolve around specific points, each measure taken individually, and are therefore reductionist. For example, regarding the ANR (Agence Nationale de la Recherche), which now funds research projects, part of the community focuses on the low success rate (10-15% depending on the year), which is indeed a source of enormous waste. Voices are therefore calling for a larger ANR budget so as to raise this success rate. Yet the ANR largely funds postdocs, so increasing its budget will mechanically increase job insecurity, the very insecurity that worries this same community. Regarding the tenure track, while the community is generally opposed to it, some see it as a potentially positive development "if it is done well", for example by allowing more attractive salaries, as the government announces. This overlooks the fact that what makes higher salaries possible is either a budget increase (which could happen without any loss of job security) or a reduction in the number of hires. Others tell themselves that the tenure track (fixed-term contract, then conditional tenure) is an improvement over the postdoc (fixed-term contract, then competitive exam). This neglects two things: first, that the tenure track does not exclude the postdoc, which remains the norm in every country that implements the tenure track; second, that the chances of obtaining tenure depend not on the words the authorities use to advertise the reform ("moral commitment"), but on the budget relative to the number of candidates.

Given that the CNRS competition, to take one example, has a success rate of 4%, there are two possibilities: either the tenure track will have a comparable selection rate, in which case the main difference with the current system will be the insecurity of the contract; or the tenure rate will be comparable, in which case the main effect will be to push back by 5-7 years the age of selection for a stable position; or of course, most likely, something in between: a selection rate of 8% and a tenure rate of 50%.
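
The scenarios above can be compared with one line of arithmetic. As a rough sketch, assume that the chance of ending up in a permanent position is simply the product of the selection rate into the tenure track and the tenure rate at its end (the symbols $s$, $t$ and $p$ are notation introduced here for illustration only, and the product rule is an assumption, not a stated model):

$$
p = s \times t, \qquad 0.08 \times 0.50 = 0.04 = 4\%.
$$

Under this assumption, the intermediate scenario leaves the overall chance of a stable position where the CNRS competition already puts it. The two extreme scenarios, read here as $s = 4\%$ with $t$ close to $100\%$, or $s$ close to $100\%$ with $t = 4\%$, give the same product. What changes is only the status of the contract and the age at which the decision falls.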

Finally, regarding the "CDIs de projet", essentially a technical measure allowing fixed-term contracts longer than those authorized by law, many people, particularly in biology, view this measure favorably. The reason is the Sauvadet law, intended to reduce job insecurity, which requires the employer to grant a permanent position to staff who have been on fixed-term contracts for several years. But since the State does not want to grant permanent positions, in practice institutions refuse to extend fixed-term contracts beyond a certain limit. Many researchers rightly perceive this as a waste, because it often happens that a laboratory has the funds to extend the contract of an experienced postdoc but is administratively forced to recruit an inexperienced postdoc instead. However, seen more globally, the effect of introducing CDIs de projet is mechanically a lengthening of postdocs, hence a later tenure decision (positive or not), possibly accompanied by a decrease in the number of postdocs - unless the ANR success rate rises through a budget increase.

In summary, a reductionist analysis does not allow a coherent critique to be formed. It tends to naturalize the way institutions operate, that is, to treat project-based research funding, for example, as a kind of law of nature rather than a social construct (hence the constant reference to "international standards"). Yet these are of course chosen policies, and in the case of France fairly recent ones. This is why it seems important to me to step back and analyze this new policy as a whole, including in the broader context of public policy.

- The general ideology: new public management

Antoine Petit, chairman and CEO of the CNRS, brilliantly summed up the ideological inspiration of the LPPR: it will be a "Darwinian" law. This terminology did not fail to draw sharp criticism from the scientific community (here and there). The idea is to make institutions and individuals compete on the basis of objectives decided by the State, and "may the best one win". This reference is absolutely not anecdotal, contrary to what Ms. Vidal and Mr. Petit later tried, unconvincingly, to explain. It reflects a form of public administration that is neither improvised nor specific to research, and that has been theorized under the name of new public management. Public services are no longer to be organized through the reasoned establishment of public infrastructure, in a way specific to each mission (an approach perceived as "Soviet" and therefore inefficient), but by putting various institutions in competition, institutions that are "free" to deploy whatever means they deem necessary to achieve quantified objectives set by the State. These objectives may be, for example, the position in an international university ranking, or the average length of stay in hospitals. This is the meaning of the word "autonomy" in the "autonomy of universities" introduced by Nicolas Sarkozy in 2007. Naturally, this is not scientific autonomy, since that presupposes precisely unconditional funding (that is, guaranteed employment and a recurrent budget), nor financial autonomy, since funding is still paid by the State, conditional on objectives set by the State. It is an autonomy of method, for achieving objectives set beforehand by the State.

Naturally, this competition at the level of institutions (e.g. universities) cascades hierarchically down to the individual level, since in order to survive, the institution must ensure that its employees contribute to meeting the objectives. This is why there is constant talk of instilling a "culture of results" or of evaluation. This call for a cultural revolution, reminiscent of Maoism, is in fact quite significant; I will come back to it.

This ideology is not specific to research, nor of course to France. Its best-known instigator is Margaret Thatcher, who put it in place in the United Kingdom in the 1980s. It was also put in place by the Nazi regime in the 1930s, as the historian Johann Chapoutot has shown - I will come back to this. Its track record, to put it mildly, is not great (see for example a very recent report in Nature on a study in the academic world). In healthcare, for example, its implementation in France through activity-based pricing (tarification à l'activité, T2A) has led to today's dramatic situation - I will come back to this as well.

- A liberal policy?

It is important to place this ideology properly on the political spectrum. It is indeed often perceived (and presented) as an ideology of liberal inspiration. It is quite plausible that its advocates sincerely think of it that way - in modern liberal democracies, of course, and perhaps not within the Third Reich, which also implemented it. This is a misunderstanding that causes great confusion, and that explains the electoral support it receives from citizens of centrist leanings - which many researchers are.

The link with liberal thought is made, as will be clear, through the idea of market efficiency. The market is said to be an efficient productive tool in that, through the free operation of producers and selection by consumers, it lets the most efficient solutions emerge. Hence the "Darwinian" essence of these ideas (in the sense of popular belief, of course, and not of Darwinism in biology, which is absolutely not individualistic). This efficiency is supposed to emerge naturally from the free operation of markets in a commercial context (I nonetheless invite the reader to read what anthropologists think of economists' naturalistic presupposition about money and commercial exchange). Economic liberalism therefore recommends reducing State intervention to the strict minimum, and may or may not be associated with political liberalism, in particular the protection of individual liberties. The market is seen as an optimal means of distributing information in an economy - although, of course, no serious economist today still considers that real markets behave or could behave like the "perfect markets" of neoclassical economics (see for example the work of J. Stiglitz). In the context of the Cold War, this type of economic organization defined itself in opposition to Soviet planning, deemed bureaucratic and "sclerotic".

Here we touch on an essential epistemological problem, to which I will return later, and which constitutes the fundamental intellectual error of new public management. While one can conceive that a market may exist naturally in the context of commercial exchanges, where there is an objective metric, money, this is a priori not the case for health, science, justice or policing. Metrics must therefore be created, for example: length of stay, number of publications, number of judgments, number of fines. Since these metrics and the objectives attached to them do not exist naturally (can truth, or justice, be quantified?), they must be created by the State. This therefore requires the creation of a State bureaucracy.

We thus arrive at a surprising paradox: whereas liberalism is a fundamentally anti-bureaucratic current of thought, here we have a doctrine that recommends setting up a State bureaucracy. This is indeed a recurring observation and complaint of all French researchers and academics since policies of this type were introduced over the past fifteen years: even though the official aim was to "modernize" a teaching and research apparatus judged bureaucratic, "sclerotic", even proto-Soviet (particularly in the case of the CNRS), by all accounts bureaucracy has on the contrary considerably invaded the daily life of these professions, through the many calls for proposals and evaluation procedures, but also through the new administrative structures created to organize the market of scientific excellence (HCERES, pôles d'excellence, university mergers, IDEX/LABEX/EQUIPEX/IHU, etc.). This also shows up in public employment: even as the recruitment of researchers, academics and technicians is falling sharply, administrative staffing, and hence management costs, have exploded at the ANR, the agency in charge of organizing the evaluation of research calls for proposals. Likewise, in public hospitals, a recurring complaint from doctors concerns the considerable time they must now spend on "coding", that is, assigning to each patient and each procedure codes drawn from a bureaucratic nomenclature. This bureaucratization is no accident, as I indicated above. It has also been described by the social sciences in the broader context of neoliberalism (see for example the work of D. Graeber and B. Hibou).

Clearly, then, this supposedly liberal ideology is first of all a bureaucratic ideology, which is already no small thing. Second, the role of the State is no longer at all to guarantee individual liberties. In a classical market of commercial exchange, what is produced is decided autonomously (in theory at least) by the actors of that market, that is, ultimately (again in theory) by consumers. The individual freedom of these actors must therefore be guaranteed. In new public management, by contrast, what is produced is decided by the State, specifically by the bureaucracy the State sets up to define the objectives, which uses competition solely as a management tool. The point is therefore absolutely no longer to guarantee the individual freedom of the actors, who must deliver what the bureaucracy asks of them. Those who do not must leave the system (the tenure track being an example). Thus one of the major points of these reforms, particularly in research, is to roll back civil servant status, which guarantees scientific and academic autonomy, so as to be able to subordinate employees to the local bureaucracy (university management) or the national one (the ministry). Paradoxically, it is in the name of liberalism that academic freedoms are being attacked.

We are thus dealing with an ideology that is fundamentally bureaucratic and that has no intention of guaranteeing individual liberties. As for political liberalism, one can only observe as well that there is not much that is liberal in the current practice of political institutions (for example the widespread use of ordinances to pass major reforms), in the violent repression of political opponents, in the intimidation of journalists, in the desire to censor social networks, in the restriction of the freedom of expression of students and academics, or in the State's deployment of automatic facial recognition.

What, then, remains of liberalism in this ideology? Very little indeed. This is why historians, sociologists and philosophers speak of neoliberalism (not to be confused with ultraliberalism), and it is also why its supporters typically reject this label vehemently, even though it is a scholarly term: it indicates that we are dealing with something quite different from liberalism. This misunderstanding about the supposedly liberal (in the classical sense) nature of this ideology is a source of great confusion. It perhaps explains the near-systematic opposition of a large part of the population to reforms that are nonetheless carried by elected centrist governments, including governments elected by members of the academic world who consider themselves center-left.

- Managerial obscurantism

For academics and researchers there is a specific reason to oppose this ideology, tied to the intellectual ethics they mean to uphold through their profession. This ideology rests on argumentation of a dismal intellectual level (a string of clichés and unsupported prejudices), on dubious analogies (the market, even Darwinism), and on a refusal of any empirical evaluation (since it has been applied, public hospitals have been in serious crisis and French research has been falling in the very rankings the authorities themselves promote). In other words, it is an obscurantist ideology verging on the sectarian. The speeches that carry this ideology make the whole academic community laugh, so much do they caricature newspeak: everything must be "excellent", "locks" must be "blown open", "disruptive innovation" must be fostered. One should visit the hilarious website of the Agence Nationale de l'Excellence Scientifique, which parodies the Agence Nationale de la Recherche.

Better to laugh about it, no doubt, but these speeches are no less dismaying. The argumentation found in official reports and in the objectives contracts of the various institutions is unfortunately not of a higher intellectual level. The idea, for example, is to align with "international standards" - mimicry that is supposed to foster innovation, which is rather rich. Evidently no one thought to take stock of the results of these policies abroad or in France, results that are hardly brilliant. What, then, is the justification as far as higher education and research are concerned? It can be paraphrased as follows: since these policies have been implemented in English-speaking countries, in particular the United States, and since the United States leads in all rankings, these policies must be effective, and so we must hurry to copy this model.

Any scientist should jump at such an argument, which conflates correlation and causation in the crudest possible way. One could have thought: well, since the United States is politically hegemonic, all of science is done in its native language, in journals that are all Anglo-American, and moreover with resources out of all proportion to French budgets. Perhaps these factors have a small influence? One could have thought: well, perhaps the great American universities are known worldwide beyond academia, while the École Polytechnique not so much, because the United States is culturally hegemonic, so that Legally Blonde is set at Harvard and not at Assas? In which case it might not be so relevant to put so much effort into renaming all the Parisian universities as variations on the Sorbonne brand? One could also have thought: well, perhaps the United States has an excellent scientific talent pool precisely because countries like France require all their best PhDs to spend several years in the United States to train among the best, "build a network" and learn English, as a condition for possibly obtaining a permanent position in their home country? But none of this was considered. What works is the "market" and generalized competition. Full stop.

On some points, there are scientific studies that go beyond managerial sophistry. For example, is it more efficient to concentrate resources on the "best"? Even adopting the bureaucratic criteria promoted by managerial ideology (number of publications, etc.), the answer, according to several studies on different data, is no. It is more efficient to diversify funding than to concentrate it by creating large structures (Fortin & Currie, 2013; Cook et al., 2015; Mongeon et al., 2016; Wahls, 2018). Other studies show that project-based selection is only weakly predictive of scientific impact, as measured by these same policies (Fang et al., 2016; Scheiner & Bouchie, 2013). Clearly, higher education and research policymakers are not interested in the empirical evaluation of their theories. It is therefore appropriate to call this attitude obscurantist. And we cannot, we must not, as scientists (in the broad sense), respect obscurantism, that is, contempt for the intellectual ethics that are the essence of our profession.

It is important to note that these policies readily present themselves as "rational" - it would all just be a matter of following arguments that may be unpleasant but are "logical". This word, "rational", should be an alarm signal for all intellectuals. For an intellectual must know that logic and reason necessarily operate within a certain model of the world. Consequently, the truth of an argument is conditional on the empirical and theoretical relevance of that model. This is why the essence of science (in the broad sense) is argued, documented and honest debate, that is, debate that makes its hypotheses explicit, supported by the appropriate social institutions (such as peer review). To pass over this aspect in silence and claim that there exists an objective Truth is to show sectarianism, and indeed obscurantism, since it requires deliberately ignoring all scholarly work contrary to the doxa.

The challenge for the academic community, then, is not simply to adjust at the margins reforms that claim to be democratic (raising the ANR success rate) or to "negotiate" them (accepting job insecurity in exchange for bonuses), but to oppose the obscurantism on which these projects are founded, and possibly to conceive of something else in their place.

- Bureaucratic thinking and sectarian ideologies

It should be recalled here that before the Cold War, bureaucracy was readily presented in laudatory terms. The sociologist Max Weber, for example, referring to industrial bureaucracy, considered it a particularly efficient, rational mode of production. Likewise, Soviet planning was conceived as a scientific, rational approach to production (algorithmic, one might perhaps say in modern language). The bureaucracy instituted by new public management is no exception to this type of discourse. I will return later to the fundamental reasons why the bureaucratic approach cannot work for activities such as science (or health). An interesting point raised by the historian Johann Chapoutot is that the policies similar to new public management pursued by the Third Reich were, contrary to the image of efficiency we have of them because of Nazi propaganda, in reality thoroughly inefficient and chaotic.

I have briefly mentioned the ideological similarities, on certain aspects only of course, with Nazi policy and with Soviet bureaucracy. I have also mentioned in passing the worrying insistence of political leaders on instilling a "culture of results": the aim is not only to set up new State structures, but also to transform individuals so that they embrace the new political orientation. This is reminiscent of the Maoist "cultural revolution", which aimed to eradicate traditional cultural values (with violent means, of course, that I do not claim to compare with current public action).

To avoid any misunderstanding, the point is not to say that the theory of new public management is a Nazi, Stalinist or Maoist ideology (these being in any case mutually incompatible). Besides, it seems plausible that Mr. Petit, when he clumsily states that the law must be inegalitarian and "Darwinian", is unaware that he is formulating the doctrine of social Darwinism, an essential foundation of neoliberalism as the philosopher Barbara Stiegler has shown, but also one of the ideological foundations of Nazism. There is no question here of putting the horrors committed in the name of these currents of thought on the same level as the destruction of social constructions carried out in the name of new public management. Nevertheless, on an intellectual level, the points in common with these sectarian ideologies should give us pause. There is indeed a partial ideological overlap whose problematic aspects can readily be understood. One example is the promotion of generalized competition between individuals for a higher purpose, individuals themselves having value only insofar as they contribute to the power of the Nation (hence the constant reference to international competition, to international rankings, to the "stars" that should be attracted); this position renders inoperative any argument of a social nature (for example the extreme job insecurity of the research profession), but also of a scientific nature (namely that the primary goal of science is the search for truth and not production). So this is not Nazism, Maoism or Stalinism, but it will nonetheless fall to the defenders of this political project to argue that "not everything should be thrown out" in these great sectarian ideologies. That every social activity must contribute exclusively to the production of the Nation. That people's opinions and attitudes must be transformed for the good of the Nation. That bureaucracy is efficient and rational.

In summary, we must not miss what is at stake in the ongoing transformation of higher education and research. The point is not to discuss the optimal ANR success rate, but to challenge the relevance of the project as the essential mode of organizing research. The point is not to discuss the most relevant metrics for evaluating people, but to challenge the deeply bureaucratic organization that this "culture of evaluation" presupposes, and to reaffirm the academic autonomy necessary for doing research. The point is not to work out how best to strengthen the "international attractiveness" of the profession, but to challenge the idea of scientific progress founded essentially on competition and selection - rather than, for example, on cooperation, education and sharing. The point is not, finally, to ask how best to foster "breakthrough innovation", but to challenge the idea that scientific activity must be subordinated to commercial production.

The first task, therefore, is to denounce the obscurantism and sectarianism on which these reforms are founded, not to negotiate them while accepting their presuppositions. The second will be to think through the central questions of science and the university in an intellectual framework freed from managerial sectarianism.

All these remarks are ultimately not very specific to higher education and research. In what follows, I will try to enter into a more specific discussion, in particular on the necessary autonomy of science, to be set against the culture of the project.

Part two: Psychological prejudices of managerial ideology